Acoustics

The underwater soundscape consists of a wide range of sounds from marine animals, natural events such as waves and rainfall, and human activities such as shipping. To monitor it, LifeWatch Belgium operates a broadband acoustic network in the Belgian part of the North Sea, capturing soundscape patterns across time and space. In addition, specialised sensor networks continuously track harbour porpoises, dolphins, and bats through their echolocation calls, to better understand their behaviour and habitats along the coast and at sea.


Humans primarily use light to perceive and understand their surroundings. But what happens in environments where light is scarce, such as underwater? In marine ecosystems, sound travels much farther than light, making it the primary means by which many aquatic species perceive and interact with their environment. Many of these species rely on sound rather than vision to navigate, communicate, and survive.

Monitoring marine soundscapes offers a cost-effective, non-invasive way to study these environments over long periods. By passively recording sound, researchers can track geophysical events, weather patterns, human activities, and animal behaviour without disturbing the ecosystem. Comparing soundscapes over time makes it possible to assess habitat quality and detect environmental change.
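Comparing soundscapes over time requires a common metric. A widely used one is the sound pressure level (SPL), expressed underwater in decibels relative to 1 µPa. The sketch below computes a broadband RMS SPL from a calibrated pressure signal. The function name and sample values are illustrative assumptions; the metrics actually used in LifeWatch analyses are not described here.

```python
import math
from typing import Sequence

# Underwater sound pressure levels are conventionally referenced to 1 µPa
# (airborne acoustics uses 20 µPa instead).
P_REF_UNDERWATER_PA = 1e-6


def spl_db(pressure_pa: Sequence[float], p_ref_pa: float = P_REF_UNDERWATER_PA) -> float:
    """Broadband RMS sound pressure level in dB re p_ref_pa.

    Assumes `pressure_pa` is already calibrated to pascals (raw hydrophone
    output converted using the sensor's sensitivity).
    """
    if len(pressure_pa) == 0:
        raise ValueError("empty signal")
    p_rms = math.sqrt(sum(p * p for p in pressure_pa) / len(pressure_pa))
    if p_rms == 0:
        raise ValueError("silent signal: level is undefined")
    return 20.0 * math.log10(p_rms / p_ref_pa)


# Hypothetical pressure snapshots (Pa) from the same station, a month apart.
march = [0.02, -0.03, 0.025, -0.015] * 1000
april = [2 * p for p in march]  # same signal shape, twice the pressure
print(f"Change in level: {spl_db(april) - spl_db(march):+.1f} dB")  # doubling pressure adds about 6 dB
```

Because the decibel scale is logarithmic, a doubling of sound pressure shows up as a rise of about 6 dB, regardless of the absolute level.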

The broadband acoustic network continuously records sounds between 10 Hz and 50 kHz, capturing geophonic sounds, most anthropogenic noise (excluding sonar and seabed mapping technologies), and biophonic events. For higher-frequency sounds, the Cetacean Passive Acoustic Network monitors harbour porpoise clicks (120–145 kHz, typically 132 kHz). Additionally, a dedicated sensor network tracks bats, passively recording their echolocation calls, which they use for navigation and foraging.
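The frequency limits above translate directly into recording requirements. By the Nyquist criterion, a signal must be sampled at more than twice its highest frequency of interest, which puts the 10 Hz–50 kHz broadband range and the 120–145 kHz porpoise clicks far apart. A quick check, with band values taken from the text and an illustrative helper name:

```python
# Frequency bands named in the text, as (lowest_hz, highest_hz).
BANDS = {
    "broadband network": (10, 50_000),
    "harbour porpoise clicks": (120_000, 145_000),
}


def nyquist_rate_hz(f_max_hz: float) -> float:
    """Lower bound on the sampling rate: twice the highest frequency of interest.

    Real recorders sample somewhat above this bound to leave room for the
    anti-aliasing filter's roll-off.
    """
    return 2 * f_max_hz


for name, (low_hz, high_hz) in BANDS.items():
    print(f"{name}: {low_hz:,}-{high_hz:,} Hz needs sampling above {nyquist_rate_hz(high_hz):,} Hz")
```

This prints a lower bound of 100,000 Hz for the broadband range and 290,000 Hz for porpoise clicks.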

By listening to the soundscape, we gain a deeper understanding of marine and coastal ecosystems, paving the way for better conservation and management efforts.

Organisms of interest

The broadband acoustic network captures underwater recordings that include sounds from various organisms of interest. The aim is to detect, classify, and interpret biological sound events, shedding light on how these species use their habitats and what their vocalisations reveal about the health of the ecosystem.
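Detection is usually the first of these steps. As a minimal illustration, not the network's actual processing chain, the sketch below flags stretches of a recording whose short-term level rises a set number of decibels above the median background level. The frame length, threshold, and function names are assumptions chosen for the example; a practical detector would usually also filter to the frequency band of the target sound.

```python
import math
import statistics
from typing import List, Optional, Sequence, Tuple


def frame_levels_db(samples: Sequence[float], frame_len: int) -> List[float]:
    """RMS level of each non-overlapping frame, in dB relative to an amplitude of 1.0."""
    levels = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / frame_len)
        levels.append(20.0 * math.log10(max(rms, 1e-12)))  # floor avoids log10(0)
    return levels


def detect_events(samples: Sequence[float], frame_len: int,
                  threshold_db: float = 10.0) -> List[Tuple[int, int]]:
    """Return (first_frame, end_frame_exclusive) runs louder than the median level + threshold_db."""
    levels = frame_levels_db(samples, frame_len)
    if not levels:
        return []
    background = statistics.median(levels)  # crude estimate of the ambient level
    events: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, level in enumerate(levels):
        loud = level > background + threshold_db
        if loud and start is None:
            start = i                      # event begins
        elif not loud and start is not None:
            events.append((start, i))      # event ends
            start = None
    if start is not None:                  # event still running at end of signal
        events.append((start, len(levels)))
    return events


# Synthetic example: quiet background with one loud burst in frames 10-11.
signal = [0.01] * 1000 + [1.0] * 200 + [0.01] * 800
print(detect_events(signal, frame_len=100))  # [(10, 12)]
```

Using the median rather than the mean as the background estimate keeps a few loud events from raising the threshold they are measured against.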

The Cetacean Passive Acoustic Network focuses on echolocation clicks of cetaceans such as harbour porpoises and dolphins, providing continuous insights into their presence and behaviour. Similarly, the bat detection sensor network monitors the echolocation calls of bats living or migrating along the coast and across the sea. These calls not only reveal migration patterns but also enable the identification of bat species, offering crucial data for understanding biodiversity in coastal and marine environments.

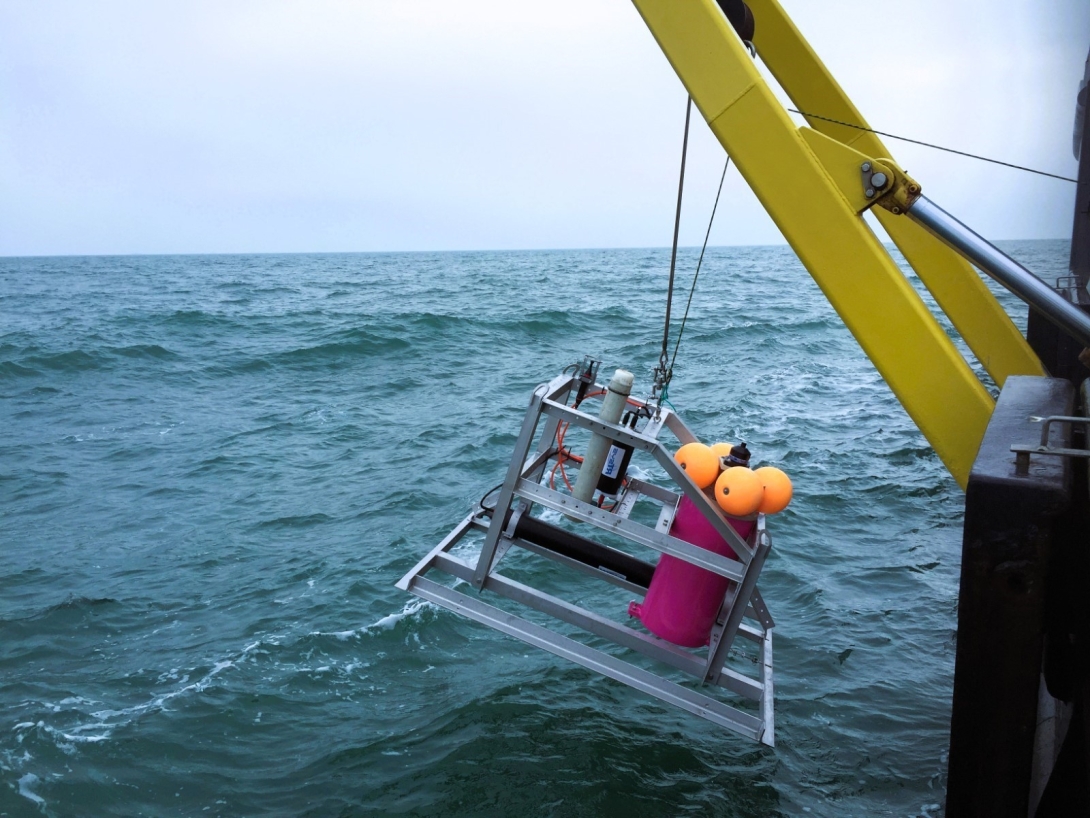

Infrastructure

The hydrophones and cetacean loggers are autonomous sensors that can record continuously in the field for several months. They are carefully maintained and deployed on tripods or multi-sensor bottom moorings fixed upright on the seafloor. Each mooring is attached to a buoy equipped with an acoustic release system, so the entire setup can be recovered efficiently. Data from these fixed recorders are retrieved every 3–4 months; because the stations stay in place between deployments, their recordings can be compared across sites and over time.
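Recording continuously for 3–4 months produces large volumes of raw audio. The sketch below estimates uncompressed storage per deployment. The sampling rate, bit depth, and channel count are assumptions for illustration only; the recorders' actual settings are not given here.

```python
SECONDS_PER_DAY = 86_400


def raw_pcm_bytes(sample_rate_hz: int, bit_depth: int, channels: int, days: float) -> float:
    """Size in bytes of uncompressed PCM audio recorded continuously for `days` days."""
    bytes_per_sample = bit_depth // 8
    return sample_rate_hz * bytes_per_sample * channels * SECONDS_PER_DAY * days


# Hypothetical settings: the recorders' actual configuration is not given in the text.
SAMPLE_RATE_HZ = 128_000  # assumption; must exceed 2 x 50 kHz for the broadband band
BIT_DEPTH = 16            # assumption
CHANNELS = 1              # assumption: one hydrophone per recorder

for days in (90, 120):    # roughly 3 and 4 months between retrievals
    terabytes = raw_pcm_bytes(SAMPLE_RATE_HZ, BIT_DEPTH, CHANNELS, days) / 1e12
    print(f"{days} days: {terabytes:.2f} TB uncompressed")
```

At these assumed settings this prints about 1.99 TB for 90 days and 2.65 TB for 120 days. The volume scales linearly with each parameter, so halving the sampling rate or recording on a 50% duty cycle halves it.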

To support various monitoring needs, a multi-purpose mooring was developed as part of the LifeWatch framework. Designed for use in dynamic, high-current, shallow coastal environments, this mooring accommodates multiple autonomous sensors through dedicated slots and clamps. The sensors remain securely attached for the duration of the measurement period, ensuring high-quality data while minimising the impact of environmental currents. This approach provides a non-intrusive method for studying marine ecosystems and their inhabitants. The multi-purpose mooring builds on the tripod system initially developed within the LifeWatch framework for the Fish Telemetry Network and is also equipped with an acoustic release system, ensuring complete recovery without leaving debris on the seafloor.

On land and along the coast, BatCorders continuously monitor bat activity. These battery- and solar-powered omnidirectional recorders, made by ecoObs, sample sound at 500 kHz with a 16-bit depth and cover a frequency range of 16–150 kHz. The full-spectrum recordings are analysed with the BatIdent software, which classifies each call to species, genus, or a higher taxonomic group. All classifications are then validated manually, and the data are stored in a dedicated database for further analysis.
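The stated recording settings can be checked with simple arithmetic: a 500 kHz sampling rate gives a Nyquist frequency of 250 kHz, comfortably above the 150 kHz top of the band, and 16-bit samples produce about 1 MB of raw audio per second. The sketch below assumes a single channel, which the text does not state.

```python
SAMPLE_RATE_HZ = 500_000  # stated in the text
BIT_DEPTH = 16            # stated in the text
BAND_TOP_HZ = 150_000     # upper end of the stated 16-150 kHz range
CHANNELS = 1              # assumption: single omnidirectional microphone

nyquist_hz = SAMPLE_RATE_HZ / 2
bytes_per_second = SAMPLE_RATE_HZ * (BIT_DEPTH // 8) * CHANNELS

assert nyquist_hz > BAND_TOP_HZ, "sampling rate too low for the stated band"
print(f"Nyquist frequency: {nyquist_hz / 1e3:.0f} kHz (band tops out at {BAND_TOP_HZ / 1e3:.0f} kHz)")
print(f"Raw data rate: {bytes_per_second / 1e6:.1f} MB/s, "
      f"{bytes_per_second * 3600 / 1e9:.1f} GB per hour of recorded audio")
```

This prints a Nyquist frequency of 250 kHz and a raw data rate of 1.0 MB/s, or 3.6 GB per hour of recorded audio.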

This integrated infrastructure supports non-invasive, high-resolution monitoring of marine and coastal environments, enabling valuable insights into biodiversity and ecosystem health.

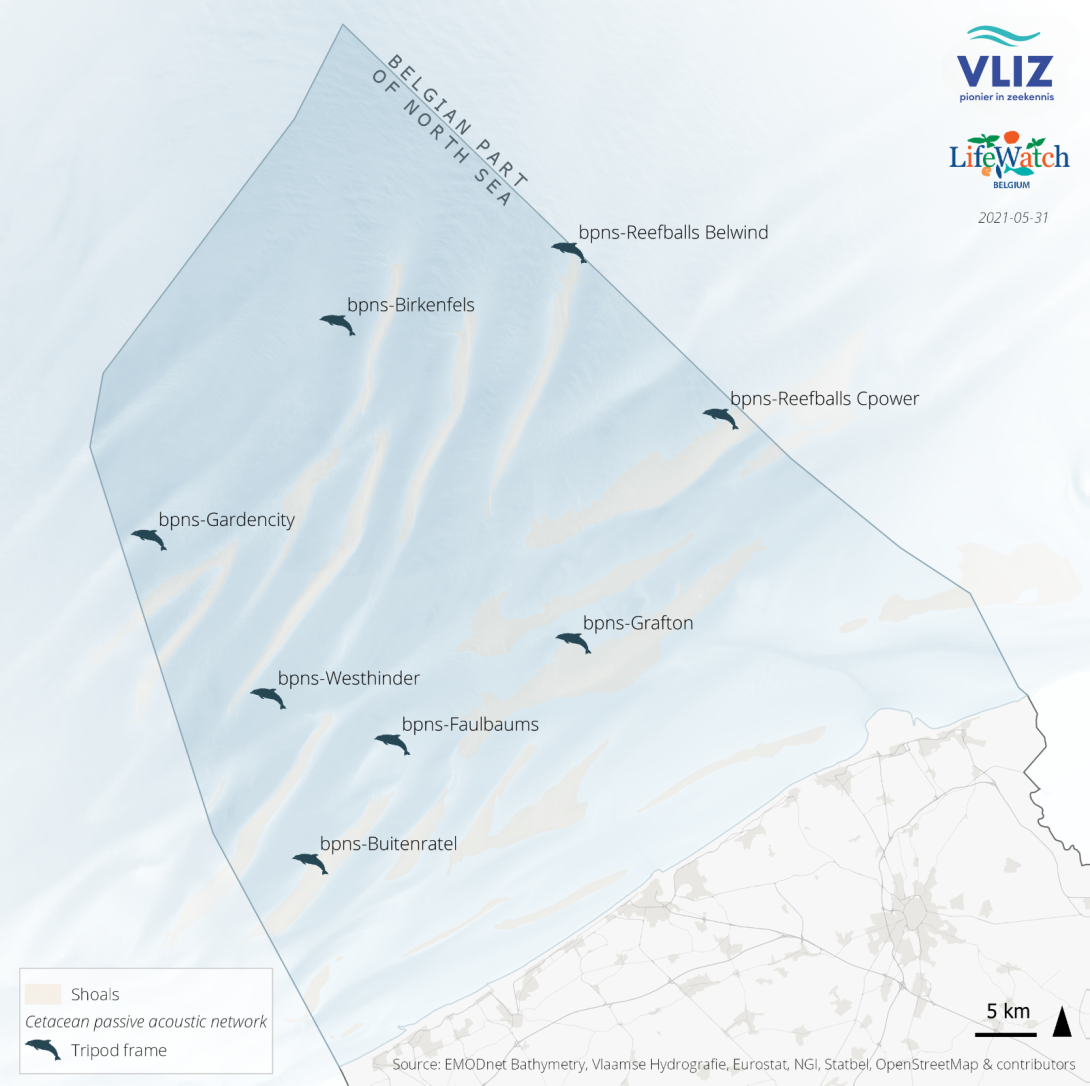

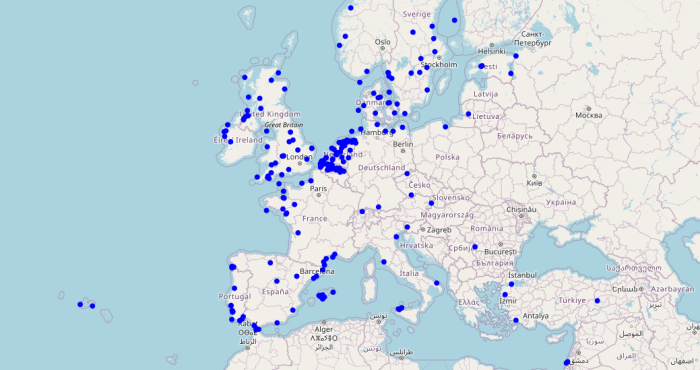

Spatial coverage

Stations of the Cetacean Passive Acoustic Network and the broadband acoustic network in the Belgian part of the North Sea.

Relevant news


A Taxon-Match tool for AlgaeBase, based on the WoRMS Taxon Match


A taxon-match tool built on AlgaeBase is now available through the LifeWatch eLab, offering the same functionality as the WoRMS taxon-match tool.

Tracking sharks in the North Sea for better protection and management


With support from LifeWatch Belgium and the European Tracking Network (ETN), researchers are tagging sharks in the Belgian part of the North Sea to uncover their movements and preferred habitats. Scientists from the Flanders Marine Institute (VLIZ) and the Research Institute for Agriculture, Fisheries and Food (ILVO) lead this work, using LifeWatch infrastructure to collect vital data that will help guide targeted protection and management.

Fish Don’t Know Borders: Tracking Aquatic Life Across Europe


Through the European Tracking Network (ETN), LifeWatch Belgium connects researchers who track fish and other aquatic species across borders. Using a shared network of acoustic receivers, scientists follow animal movements from rivers to seas, revealing how aquatic life links ecosystems throughout Europe.

From Europe to the Atlantic: new insights into eel migration


European eels are legendary travellers, undertaking journeys of up to 9,000 km to spawn in the Atlantic Ocean. A groundbreaking study led by LifeWatch researchers has brought together tracking data from more than 2,300 eels across 9 countries, revealing how geography and river barriers shape the timing of this epic migration.